The post 60 students participated in the ACM Europe Summer School dedicated to HPC Computer Architectures first appeared on RISC2 Project.

The RISC2 project was also represented: through financial support, it made it possible for some students from Latin America to take part, thus continuing its work in the social field. In total, 60 students of 25 different nationalities took part in the initiative.

The post HPC, Data & Architecture Week first appeared on RISC2 Project.

All the videos are available here:

Sergio Nesmachnow

- Class 1

- Class 2

- Class 3

- Class 4

Pablo Ezzati

- Class 1

- Class 2

Esteban Meneses

- Class 1

- Class 2

- Class 3

The post Hypatia first appeared on RISC2 Project.

- Master Node: 1 PowerEdge R640 Server: 2 x Intel® Xeon® Silver 4210R 2.4G, 10C/20T, 9.6GT/s, 13.75M Cache, Turbo, HT (100W) DDR4-2400. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

- Compute Node:

- 10 PowerEdge R640 Server: 2 x Intel® Xeon® Gold 6242R 3.1G, 20C/40T, 10.4GT/s, 27.5M Cache, Turbo, HT (205W) DDR4-2933. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

- 3 PowerEdge R6525 Server 256 GB: 2 x AMD EPYC 7402 2.80GHz, 24C/48T, 128M Cache (180W) DDR4-3200. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

- 2 PowerEdge R6525 Server 512 GB: 2 x AMD EPYC 7402 2.80GHz, 24C/48T, 128M Cache (180W) DDR4-3200. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

- 1 PowerEdge R6525 Server 1 TB: 2 x AMD EPYC 7402 2.80GHz, 24C/48T, 128M Cache (180W) DDR4-3200. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

- 2 PowerEdge R740 Server: 3 x NVIDIA® Quadro® RTX6000 24 GB, 250W, Dual Slot, PCIe x16 Passive Cooled, Full Height GPU. Intel® Xeon® Gold 6226R 2.9GHz, 16C/32T, 10.4GT/s, 22M Cache, Turbo, HT (150W) DDR4-2933. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

- Storage:

- 1 Dell EMC ME4084 SAS OST – 84 X 4TB HDD 7.2K 512n SAS12 3.5

- 1 Dell EMC ME4024 SAS MDT – 24 X 960 GB SSD SAS Read Intensive 12Gbps 512e 2.5in Hot-plug Drive, PM5-R, 1DWPD, 1752 TBW

- 4 PowerEdge R740 Server: 2 x Intel® Xeon® Gold 6230R 2.1G, 26C/52T, 10.4GT/s, 35.75M Cache, Turbo, HT (150W) DDR4-2933. Mellanox ConnectX-6 Single Port HDR100 QSFP56 Infiniband Adapter

The post Subsequent Progress And Challenges Concerning The México-UE Project ENERXICO: Supercomputing And Energy For México first appeared on RISC2 Project.

The ENERXICO Project focused on developing advanced simulation software solutions for the oil & gas, wind energy and transportation powertrain industries. The collaborating institutions were, for México: ININ (institution responsible for México), Centro de Investigación y de Estudios Avanzados del IPN (Cinvestav), Universidad Nacional Autónoma de México (UNAM IINGEN, FCUNAM), Universidad Autónoma Metropolitana-Azcapotzalco, Instituto Mexicano del Petróleo, Instituto Politécnico Nacional (IPN) and Pemex; and for the European Union: Barcelona Supercomputing Center (BSC, institution responsible for the EU), Technische Universität München (TUM, Germany), Université Grenoble Alpes (UGA, France), CIEMAT (Spain), Repsol, Iberdrola, Bull (France) and Universidad Politécnica de Valencia (Spain).

The project comprised four work packages (WPs):

WP1 Exascale Enabling: This was a cross-cutting work package that focused on assessing performance bottlenecks and improving the efficiency of the HPC codes proposed in the vertical WPs (EU Coordinator: BULL, MEX Coordinator: CINVESTAV-COMPUTACIÓN);

WP2 Renewable energies: This WP deployed new applications required to design, optimize and forecast the production of wind farms (EU Coordinator: IBR, MEX Coordinator: ININ);

WP3 Oil and gas energies: This WP addressed the impact of HPC on the entire oil industry chain (EU Coordinator: REPSOL, MEX Coordinator: ININ);

WP4 Biofuels for transport: This WP carried out advanced numerical simulations of biofuels under conditions similar to those of an engine (EU Coordinator: UPV-CMT, MEX Coordinator: UNAM).

For WP1, the following codes were optimized for exascale computers: Alya, BSIT, DualSPHysics, ExaHyPE, SeisSol, SEM46 and WRF.

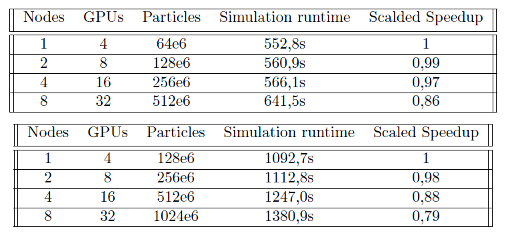

As an example, we present some of the results for the DualSPHysics code. We evaluated two architectures. The first consisted of identical nodes, each equipped with two Intel Xeon Gold 6248 processors clocked at 2.5 GHz, about 192 GB of system memory and four NVIDIA Tesla V100 GPUs with 32 GB of memory each. The second consisted of identical nodes, each equipped with two AMD EPYC 7763 (Milan) processors clocked at 2.45 GHz, about 512 GB of system memory and four NVIDIA A100 (Ampere) GPUs with 40 GB of memory each. The code was compiled and linked with CUDA 10.2 and OpenMPI 4, and the application was executed using one GPU per MPI rank.

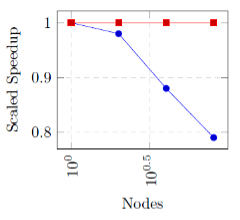

Figures 1 and 2 show the results of the strong and weak scaling tests, which indicate very good scalability. Motivated by these excellent results, we are now performing new SPH simulations on the LUMI supercomputer with up to 26,834 million particles on up to 500 GPUs, that is, about 53.7 million particles per GPU. These simulations will initially model a Wave Energy Converter (WEC) farm (see Figure 3) and will later be extended to turbulence models.

Figure 1. Strong scaling test with a fixed number of particles and an increasing number of GPUs.

Figure 2. Weak scaling test with increasing number of particles and GPUs.

Figure 3. Wave Energy Converter (WEC) Farm (taken from https://corpowerocean.com/)
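Strong and weak scaling tests such as those in Figures 1 and 2 are conventionally summarized as parallel efficiencies. The minimal Python sketch below shows those standard definitions; it is not taken from DualSPHysics, and the timings in the example are hypothetical placeholders, not ENERXICO measurements. It also checks the particles-per-GPU figure quoted for the planned LUMI runs.

```python
# Minimal sketch of the conventional strong- and weak-scaling efficiency metrics.
# The timings used below are hypothetical, not measured ENERXICO results.


def strong_scaling_efficiency(t_base: float, n_base: int, t_n: float, n: int) -> float:
    """Fixed total problem size: ideal runtime shrinks in proportion to GPU count.

    E = (t_base * n_base) / (t_n * n); 1.0 means perfect speedup.
    """
    return (t_base * n_base) / (t_n * n)


def weak_scaling_efficiency(t_base: float, t_n: float) -> float:
    """Fixed work per GPU: ideal runtime stays constant as GPUs are added.

    E = t_base / t_n; 1.0 means no slowdown from adding GPUs.
    """
    return t_base / t_n


def particles_per_gpu(total_particles: float, n_gpus: int) -> float:
    """Average number of particles handled by each GPU (one GPU per MPI rank)."""
    return total_particles / n_gpus


if __name__ == "__main__":
    # Hypothetical timings in seconds per simulation step.
    print(strong_scaling_efficiency(t_base=100.0, n_base=4, t_n=27.0, n=16))
    print(weak_scaling_efficiency(t_base=10.0, t_n=11.0))
    # Figures quoted in the text: 26,834 million particles on 500 GPUs.
    print(particles_per_gpu(26_834e6, 500) / 1e6)  # about 53.7 (million)
```

In a strong-scaling test an efficiency close to 1.0 means the runtime drops almost in proportion to the number of GPUs; in a weak-scaling test it means the runtime stays almost constant as particles and GPUs grow together.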

As part of WP3, ENERXICO developed a first version of a computer code called Black Hole (or BH code) for the numerical simulation of oil reservoirs, based on the numerical technique known as Smoothed Particle Hydrodynamics (SPH). This new code is an extension of the DualSPHysics code (https://dual.sphysics.org/). It is the first SPH-based code developed for the numerical simulation of oil reservoirs and offers important advantages over commercial codes based on other numerical techniques.

The BH code is a large-scale, massively parallel reservoir simulator capable of performing simulations with billions of “particles”, or fluid elements, that represent the system under study. It contains improved multi-physics modules that automatically combine the effects of interrelated physical and chemical phenomena to accurately simulate in-situ recovery processes. This work has also led to the development of a graphical user interface, a multi-platform application for running the code and visualizing results, for carrying out simulations with data provided by industrial partners, and for comparing the results with available commercial packages.
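In SPH, a field such as density is estimated at each particle by a kernel-weighted sum over its neighbours, rho_i = sum_j m_j W(x_i - x_j, h), where h is the smoothing length. The Python sketch below shows that summation in 1D. The cubic-spline kernel, the brute-force neighbour loop, the function names and all parameter values are illustrative assumptions, not the actual implementation in the BH code or DualSPHysics, which work in 3D with optimized neighbour searches.

```python
from typing import List


def cubic_spline_kernel_1d(r: float, h: float) -> float:
    """Cubic-spline smoothing kernel W(r, h) in 1D (assumed kernel choice).

    Compact support of radius 2h; the 1D normalisation sigma = 2 / (3h)
    makes the kernel integrate to 1.
    """
    q = abs(r) / h
    sigma = 2.0 / (3.0 * h)
    if q < 1.0:
        return sigma * (1.0 - 1.5 * q**2 + 0.75 * q**3)
    if q < 2.0:
        return sigma * 0.25 * (2.0 - q) ** 3
    return 0.0


def sph_density(positions: List[float], masses: List[float], h: float) -> List[float]:
    """Density at every particle: rho_i = sum_j m_j * W(x_i - x_j, h).

    Brute-force O(N^2) loop over all particle pairs, for clarity only.
    """
    densities = []
    for xi in positions:
        rho = 0.0
        for xj, mj in zip(positions, masses):
            rho += mj * cubic_spline_kernel_1d(xi - xj, h)
        densities.append(rho)
    return densities


if __name__ == "__main__":
    dx = 0.01                               # particle spacing (hypothetical)
    positions = [i * dx for i in range(101)]
    masses = [1000.0 * dx] * len(positions)  # mass for a 1000 kg/m^3 fluid in 1D
    rho = sph_density(positions, masses, h=1.5 * dx)
    print(rho[50], rho[0])  # interior value close to 1000; edge particle lower
```

The interior particle recovers the prescribed density to within about half a percent, while particles near the edge see fewer neighbours and get a lower estimate, which is why SPH codes need special boundary treatment.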

Furthermore, a considerable effort is currently under way to simplify setting up the input for reservoir simulations from exploration data, by means of a workflow fully integrated into our industrial partners’ software environment. A crucial part of the numerical simulations is the equation of state. We have developed an equation of state based on crude oil data (the so-called PVT, or pressure-volume-temperature, data) in two forms: first, as a subroutine integrated into the code, and second, as a subroutine that interpolates property tables generated from the equation-of-state subroutine.
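The two forms of the equation of state described above (direct evaluation versus interpolation in precomputed property tables) can be illustrated with a minimal Python sketch. The toy equation of state (a slightly compressible oil with constant compressibility), its constants and the function names are hypothetical stand-ins, not the PVT model developed in ENERXICO; for brevity, only the pressure dependence at a fixed temperature is tabulated.

```python
import bisect
import math
from typing import List, Tuple

# Hypothetical placeholder constants, not ENERXICO's PVT data.
RHO_REF = 850.0           # oil density at the reference pressure, kg/m^3
P_REF = 1.0e5             # reference pressure, Pa
COMPRESSIBILITY = 1.0e-9  # isothermal compressibility, 1/Pa


def eos_density(p: float) -> float:
    """Form 1: evaluate the (toy) equation of state directly."""
    return RHO_REF * math.exp(COMPRESSIBILITY * (p - P_REF))


def build_table(p_min: float, p_max: float, n: int) -> Tuple[List[float], List[float]]:
    """Tabulate the equation of state once on a uniform pressure grid (n >= 2)."""
    step = (p_max - p_min) / (n - 1)
    pressures = [p_min + i * step for i in range(n)]
    return pressures, [eos_density(p) for p in pressures]


def interp_density(p: float, pressures: List[float], densities: List[float]) -> float:
    """Form 2: piecewise-linear interpolation in the table, clamped at its ends."""
    if p <= pressures[0]:
        return densities[0]
    if p >= pressures[-1]:
        return densities[-1]
    k = bisect.bisect_right(pressures, p)  # pressures[k - 1] <= p < pressures[k]
    t = (p - pressures[k - 1]) / (pressures[k] - pressures[k - 1])
    return densities[k - 1] + t * (densities[k] - densities[k - 1])


if __name__ == "__main__":
    table_p, table_rho = build_table(1.0e5, 5.0e7, 200)
    p = 2.5e7
    print(eos_density(p), interp_density(p, table_p, table_rho))
```

Tabulation trades a small, controllable interpolation error for cheap lookups, which can pay off when an expensive equation of state has to be evaluated for very many particles at every time step.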

An oil reservoir consists of a porous medium of rock and other solids that contains a multiphase fluid of oil, gas and water. The aim of the code is to simulate fluid flow in a porous medium, as well as the behaviour of the system at different pressures and temperatures. The tool should help reduce the uncertainties in the predictions that are carried out. For example, it may answer questions about the benefits of injecting a solvent, which could be CO2, nitrogen, combustion gases, methane, etc., into a reservoir, and about the breakthrough times of these gases at the production wells. With these estimates, operators can take the necessary measures to mitigate the presence of the gases and calculate the cost, the injection pressure, the injection volumes and, most importantly, where and for how long to inject. The same applies to more complex processes, such as the injection of fluids, air or steam, which interact with the rock, oil, water and gas present in the reservoir. The simulator should also support monitoring and the preparation of measurement plans.

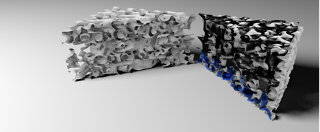

To perform a simulation of an oil reservoir field, an initial model needs to be created. Using geophysical forward and inverse numerical techniques, the ENERXICO project evaluated novel, high-performance simulation packages for challenging seismic exploration cases characterized by extreme geometric complexity. We are now exploring high-order methods based on fully unstructured tetrahedral meshes, as well as tree-structured Cartesian meshes with adaptive mesh refinement (AMR), for better spatial resolution. Using this methodology, together with seismic and geophysical data from naturally fractured oil reservoir fields, our packages (and some commercial packages) can create the geometry (see Figure 4) and reproduce the basic properties of the reservoir field under study. A number of numerical simulations are then performed, and from these, exploitation scenarios for the oil fields are generated.

Figure 4. A detail of the initial model for a SPH simulation of a porous medium.
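The idea behind tree-structured Cartesian meshes with AMR is that cells are recursively subdivided only where more resolution is needed. The Python sketch below shows this for a 2D quadtree. The refinement criterion (refine any cell that contains a given point of interest), the class and function names and the maximum level are hypothetical choices for illustration; production codes work in 3D and typically refine according to solution error indicators or geological features.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Cell:
    """Square cell [x0, x0 + size) x [y0, y0 + size) at a given tree level."""
    x0: float
    y0: float
    size: float
    level: int
    children: List["Cell"] = field(default_factory=list)

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x0 + self.size and self.y0 <= y < self.y0 + self.size

    def refine(self) -> None:
        """Split this cell into four equal children, one quadtree level deeper."""
        half = self.size / 2.0
        self.children = [
            Cell(self.x0 + i * half, self.y0 + j * half, half, self.level + 1)
            for j in (0, 1)
            for i in (0, 1)
        ]


def adapt(cell: Cell, needs_refinement: Callable[[Cell], bool], max_level: int) -> None:
    """Recursively refine every cell flagged by the (hypothetical) criterion."""
    if cell.level < max_level and needs_refinement(cell):
        cell.refine()
        for child in cell.children:
            adapt(child, needs_refinement, max_level)


def leaves(cell: Cell) -> List[Cell]:
    """Collect the leaf cells, i.e. the cells that form the computational mesh."""
    if not cell.children:
        return [cell]
    return [leaf for child in cell.children for leaf in leaves(child)]


if __name__ == "__main__":
    root = Cell(0.0, 0.0, 1.0, level=0)
    # Hypothetical criterion: refine around a point of interest, e.g. a fracture.
    adapt(root, lambda c: c.contains(0.3, 0.7), max_level=3)
    mesh = leaves(root)
    print(len(mesh), min(c.size for c in mesh))
```

Refining around (0.3, 0.7) up to level 3 yields 10 leaf cells (three at each of levels 1 and 2 and four of size 0.125 around the point), instead of the 64 cells a uniform grid at the same finest resolution would need.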

More information about the ENERXICO Project can be found at https://enerxico-project.eu/

By: Jaime Klapp (ININ, México) and Isidoro Gitler (Cinvestav, México)

The post More than 100 students participated in the HPC, Data & Architecture Week first appeared on RISC2 Project.

This event had four main courses: “Foundations of Parallel Programming”, “Large scale data processing and machine learning”, “New architectures and specific computing platforms”, and “Administration techniques for large-scale computing facilities”.

More than 100 students, who traveled from different parts of the country, actively participated in the event. Thirty students received financial support for travel and living expenses, provided by the National HPC System (SNCAD), which depends on Argentina’s Ministry of Science.

Esteban Mocskos, one of the organizers of the event, believes “this kind of events should be organized regularly to sustain the flux of students in the area of HPC”. In his opinion, “a lot of students from Argentina get their first contact with HPC topics. As such a large country, impacting a distant region also means impacting the neighboring countries. Those students will bring their experience to other students in their places”. According to Mocskos, initiatives like the “HPC, Data & Architecture Week” spark a lot of collaborations.

The post ACM Europe Summer School on HPC Computer Architectures for AI and Dedicated Applications first appeared on RISC2 Project.

The post Costa Rica HPC School 2023 aimed at teaching the fundamental tools and methodologies in parallel programming first appeared on RISC2 Project.

Building on the success of previous editions, the seventh installment of the Costa Rica High Performance Computing School (CRHPCS) aimed to prepare students and researchers to introduce HPC tools into their workflows. A select team of international experts taught sessions on shared-memory programming, distributed-memory programming, accelerator programming, and high performance computing. This edition featured instructors Alessandro Marani and Nitin Shukla from CINECA, who greatly helped bring a vibrant atmosphere to the sessions.

Bernd Mohr, from the Jülich Supercomputing Centre, was the keynote speaker of this year’s edition. A well-known figure in the HPC community at large, Bernd presented the talk “Parallel Performance Analysis at Scale: From Single Node to one Million HPC Cores”. Taking the audience on a journey through different architecture setups, Bernd highlighted the importance and challenges of performance analysis.

For Esteban Meneses, Costa Rica HPC School General Chair, the School is a key element in building a stronger and more connected HPC community in the region: “This year, thanks to the RISC2 project, we were able to gather participants from Guatemala, El Salvador, and Colombia. Creating these ties is fundamental for later developing more complex initiatives. We aim at preparing future scientists that will develop groundbreaking computer applications that tackle the most pressing problems of our region.”

RISC2 is supporting the organization of the HPC, Data & Architecture Week, which will take place between March 13-17, 2023.

It is a comprehensive school on High Performance Computing (HPC), new computing architectures and large-scale data processing. Five simultaneous tracks address the different training needs of undergraduate and postgraduate students, as well as cluster administrators.

The school aims to bring this kind of outreach to users and administrators of HPC equipment across the country, with specific technical courses for each audience and joint activities that promote interaction between them.

Find more information about the school here.

The post HPC, Data & Architecture Week first appeared on RISC2 Project.

The post JUPITER Ascending – First European Exascale Supercomputer Coming to Jülich first appeared on RISC2 Project.

The computer named JUPITER (short for “Joint Undertaking Pioneer for Innovative and Transformative Exascale Research”) will be installed in 2023/2024 on the campus of Forschungszentrum Jülich. The system is to be operated by the Jülich Supercomputing Centre (JSC), whose supercomputers JUWELS and JURECA currently rank among the most powerful in the world. JSC took part in the application procedure for a high-end supercomputer as a member of the Gauss Centre for Supercomputing (GCS), an association of the three German national supercomputing centres: JSC in Jülich, the High-Performance Computing Center Stuttgart (HLRS), and the Leibniz Supercomputing Centre (LRZ) in Garching. The competition was organized by the European supercomputing initiative EuroHPC JU, which was formed by the European Union together with European countries and private companies.

JUPITER is now set to become the first European supercomputer to make the leap into the exascale class. In terms of computing power, it will be more powerful than 5 million modern laptops or PCs. Just like Jülich’s current supercomputer JUWELS, JUPITER will be based on a dynamic, modular supercomputing architecture, which Forschungszentrum Jülich developed together with European and international partners in the EU’s DEEP research projects.

In a modular supercomputer, various computing modules are coupled together. This allows the parts of a complex simulation to be distributed over several modules, so that the different hardware properties of each module can be optimally exploited. Its modular construction also means that the system is well prepared for integrating future technologies such as quantum computing or neuromorphic modules, which emulate the neural structure of a biological brain.

Figure: Modular Supercomputing Architecture. Computing and storage modules of the exascale computer in its base configuration (blue), as well as optional modules (green) and modules for future technologies (purple) as possible extensions.

In its base configuration, JUPITER will have an enormously powerful booster module with highly efficient GPU-based computation accelerators. Much as a turbocharger boosts an engine, this booster accelerates massively parallel applications, for example to calculate high-resolution climate models, develop new materials, simulate complex cell processes and energy systems, advance basic research, or train next-generation, computationally intensive machine-learning algorithms.

One major challenge is the energy that is required for such large computing power. The average power is anticipated to be up to 15 megawatts. JUPITER has been designed as a “green” supercomputer and will be powered by green electricity. The envisaged warm water cooling system should help to ensure that JUPITER achieves the highest efficiency values. At the same time, the cooling technology opens up the possibility of intelligently using the waste heat that is produced. For example, just like its predecessor system JUWELS, JUPITER will be connected to the new low-temperature network on the Forschungszentrum Jülich campus. Further potential applications for the waste heat from JUPITER are currently being investigated by Forschungszentrum Jülich.
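As a rough, back-of-the-envelope illustration of what these efficiency targets imply, assume that the system sustains exactly one exaflop/s (10^18 floating-point operations per second) while drawing the full 15 megawatts quoted above. Both are simplifying assumptions, since sustained performance and power draw vary with the workload:

```latex
\eta \approx \frac{10^{18}\,\text{FLOP/s}}{15 \times 10^{6}\,\text{W}}
     \approx 6.7 \times 10^{10}\,\text{FLOP/s per W}
     \approx 67\,\text{GFLOP/s per W}
```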

By Jülich Supercomputing Centre (JSC)

The first image shows JUWELS, Germany’s fastest supercomputer, at Forschungszentrum Jülich. JUWELS is funded in equal parts by the Federal Ministry of Education and Research (BMBF) and the Ministry of Culture and Science of the State of North Rhine-Westphalia (MKW NRW) via the Gauss Centre for Supercomputing (GCS). (Copyright: Forschungszentrum Jülich / Sascha Kreklau)

The post RISC2 supported ACM Europe Summer School 2022 first appeared on RISC2 Project.

The RISC2 project supported the participation of five Latin American students, boosting the exchange of experience and knowledge between Europe and Latin America in the HPC field. After the Summer School, the students whose participation was supported by RISC2 wrote in a blog post: “We have brought home a new vision of the world of computing, new contacts, and many new perspectives that we can apply in our studies and share with our colleagues in the research groups and, perhaps, start a new foci of study”.

Distinguished scientists in the HPC field gave lectures and tutorials addressing architecture, software stack and applications for HPC and AI, invited talks, a panel on The Future of HPC and a final keynote by Prof Mateo Valero. On the last day of the week, the ACM School merged with MATEO2022 (“Multicore Architectures and Their Effective Operation 2022”), attended by world-class experts in computer architecture in the HPC field.

The ACM Europe Summer School brought together 50 participants from 28 different countries, ranging from young computer science researchers and engineers to outstanding MSc students and senior undergraduate students.
